www.industryemea.com

03

'25

Taming AI’s data hunger with ultra-efficient, smallest-node technology

Fraunhofer, with TSRI, develops FeMFET-based nanosheet memory under 3 nm, enabling in-memory computing for energy-efficient AI chips in smartphones, cars, and medical devices.

www.fraunhofer.de

As demand for AI and neuromorphic computing grows, energy consumption in data centers and edge devices is rising sharply. A major bottleneck is the movement of data between main memory and computing units. A joint German-Taiwanese project addresses this challenge by developing memory technology that enables computing directly within memory, significantly reducing latency and energy use.

Solution: Hafnium Oxide-Based Ferroelectric FETs

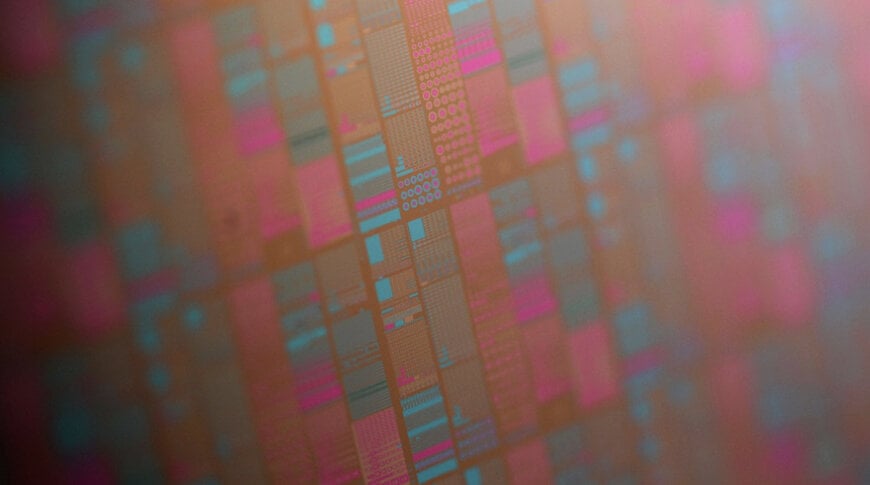

The collaboration focuses on ferroelectric FETs (FeFETs) based on hafnium oxide. Thanks to their thin hafnium oxide layers, these devices are compatible with modern semiconductor fabrication processes. They operate capacitively rather than resistively, which enables up to 100 times lower energy consumption in embedded systems than conventional non-volatile memory. The project aims to establish a 300 mm research line capable of developing memory solutions not only for consumer electronics but also for automotive, industrial, and medical applications.

Added Value: Energy Savings and Enhanced AI Performance

By tightly integrating memory and computing within the same platform, the technology reduces energy consumption while improving speed and efficiency. The German-Taiwanese partnership combines expertise in materials development, high-resolution characterization, and advanced device architectures to create a foundation for next-generation, energy-efficient memory systems. This approach directly addresses critical performance and sustainability challenges in AI hardware and edge computing.

www.ipms.fraunhofer.com