www.industryemea.com

06

'19


Deep learning + machine vision = the next-gen inspection

Combining machine vision and deep learning gives companies a powerful tool on both the operational and ROI fronts. Grasping the differences between traditional machine vision and deep learning, and understanding how these technologies complement rather than compete with or replace each other, is therefore essential to maximizing investments. This article aims to clarify both.

Over the last decade, technological changes and improvements have been many and varied: device mobility… big data… artificial intelligence (AI)… internet-of-things… robotics… blockchain… 3D printing… machine vision… In all these domains, novel things came out of R&D labs to improve our daily lives.

Engineers like to adopt and adapt technologies to their tough environments and constraints. Strategically planning for the adoption and leveraging of some or all of these technologies will be crucial in the manufacturing industry.

Let’s focus here on AI, and specifically on deep learning-based image analysis, also called example-based machine vision. Combined with traditional rule-based machine vision, it can help robotic assemblers identify the correct parts, detect whether a part is present, missing, or improperly assembled on the product, and determine more quickly whether those conditions are actual problems. And this can be done with high precision.

Let’s first see what deep learning is

Without getting too deep (may I say?) into the details, let’s talk about GPU hardware. GPUs (graphics processing units) gather thousands of relatively simple processing cores on a single chip. This massively parallel architecture is well suited to the many simple, simultaneous calculations that neural networks require. It makes it practical to deploy biology-inspired, multi-layered “deep” neural networks, which are loosely modeled on the human brain.

With such an architecture, deep learning can solve specific tasks without being explicitly programmed to do so. In other words, classical computer applications are programmed by humans to be “task-specific”, whereas deep learning uses data (images, speech, text, numbers…) to train neural networks. Starting from a primary logic developed during initial training, deep neural networks can keep refining their performance as they are retrained on new data.
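To make the contrast between programming a rule and learning from examples concrete, here is a minimal sketch in Python. It is not a deep network: it trains a single artificial neuron, the building block that deep networks stack in many layers. The feature (a "scratch intensity" between 0 and 1), the example values, and the function names are all assumptions made up for illustration.

```python
import math

def train_single_neuron(samples, labels, epochs=2000, lr=0.5):
    """Fit the weight and bias of one sigmoid neuron by gradient descent."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(samples, labels):
            p = 1.0 / (1.0 + math.exp(-(w * x + b)))  # predicted defect probability
            grad_w += (p - y) * x
            grad_b += (p - y)
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b

def is_defective(w, b, x):
    """Classify a new part from its feature value using the learned parameters."""
    return 1.0 / (1.0 + math.exp(-(w * x + b))) >= 0.5

# Hypothetical labeled examples: 0 = good part, 1 = defective part.
intensities = [0.05, 0.10, 0.15, 0.20, 0.60, 0.70, 0.80, 0.90]
labels = [0, 0, 0, 0, 1, 1, 1, 1]

w, b = train_single_neuron(intensities, labels)
learned_boundary = -b / w  # feature value where the decision flips
```

Nobody wrote the decision threshold by hand here; it emerges from the labeled examples. Adding new examples and training again moves the boundary without touching the code.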

Deep learning is based on detecting differences: it constantly looks for alterations and irregularities in a set of data, and it reacts to unpredictable defects. Humans do this naturally well. Computer systems based on rigid programming aren’t good at this. (But unlike human inspectors on production lines, computers do not get tired of repeating the same task.)
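The idea of looking for irregularities relative to known-good data can be sketched without any neural network. The Python example below is a simple statistical stand-in, not deep learning: it learns a per-pixel reference from defect-free images and scores a new image by its largest deviation. The tiny flattened images, the threshold of 3.0, and the function names are assumptions chosen for illustration.

```python
def fit_reference(good_images):
    """Per-pixel mean and standard deviation over defect-free example images."""
    n = len(good_images)
    size = len(good_images[0])
    means = [sum(img[i] for img in good_images) / n for i in range(size)]
    stds = [
        (sum((img[i] - means[i]) ** 2 for img in good_images) / n) ** 0.5
        for i in range(size)
    ]
    return means, stds

def anomaly_score(image, means, stds, eps=1.0):
    """Largest per-pixel deviation from the reference, scaled by (std + eps).

    eps prevents division by zero and damps pixels that barely vary.
    """
    return max(abs(p - m) / (s + eps) for p, m, s in zip(image, means, stds))

# Hypothetical 2x3 grey-level images, flattened row by row.
good_images = [
    [100, 102, 98, 101, 99, 100],
    [101, 100, 99, 100, 100, 102],
    [99, 101, 100, 102, 98, 101],
]
means, stds = fit_reference(good_images)

normal_part = [100, 101, 99, 101, 99, 101]
scratched_part = [100, 101, 99, 180, 100, 101]  # one unusually bright pixel

THRESHOLD = 3.0  # assumed cut-off; in practice tuned on validation images
normal_flagged = anomaly_score(normal_part, means, stds) > THRESHOLD
scratch_flagged = anomaly_score(scratched_part, means, stds) > THRESHOLD
```

A deep network plays the same role with a far richer notion of "normal", which is what lets it cope with textures and backgrounds that a per-pixel reference cannot model.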

In daily life, typical applications of deep learning are facial recognition (to unlock computers or identify people in photos)… recommendation engines (on streaming video/music services or when shopping on e-commerce sites)… spam filtering in emails… disease diagnostics… credit card fraud detection…

Deep learning technology can produce very accurate outputs based on the data it was trained on. It is being used to predict patterns, detect variance and anomalies, and make critical business decisions. This same technology is now migrating into advanced manufacturing practices for quality inspection and other judgment-based use cases.

When implemented for the right types of factory applications, in conjunction with machine vision, deep learning will scale up profits in manufacturing (especially when compared with investments in other emerging technologies that might take years to pay off).

How does deep learning complement machine vision?

A machine vision system relies on a digital sensor placed inside an industrial camera with specific optics. The camera acquires images, which are fed to a PC. There, specialized software processes and analyzes them and measures various characteristics to support decision making. Machine vision systems perform reliably with consistent and well-manufactured parts. They operate via step-by-step filtering and rule-based algorithms.
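As a rough illustration of what "step-by-step filtering and rule-based" means, the Python sketch below checks whether a part is present in a region of interest: threshold the pixels, count the bright ones, apply a fixed rule. The image values, the region coordinates, and the parameter defaults are assumptions for the example, not values from any particular vision system.

```python
def part_present(image, roi, threshold=128, min_pixels=4):
    """Rule-based presence check on a grey-level image (list of pixel rows).

    Step 1: restrict the search to the region of interest (top, left, bottom, right).
    Step 2: threshold each pixel into bright or dark.
    Step 3: apply a fixed rule on the number of bright pixels.
    """
    top, left, bottom, right = roi
    bright = sum(
        1
        for row in image[top:bottom]
        for pixel in row[left:right]
        if pixel >= threshold
    )
    return bright >= min_pixels

# Hypothetical 4x4 images: a bright part on a dark fixture, and an empty fixture.
image_with_part = [
    [20, 20, 20, 20],
    [20, 200, 210, 20],
    [20, 205, 198, 20],
    [20, 20, 20, 20],
]
empty_fixture = [[20] * 4 for _ in range(4)]

roi = (1, 1, 3, 3)  # rows 1-2, columns 1-2
```

Every number in this check was chosen by a person. That is what makes it fast and predictable, and also why it needs reprogramming when lighting or part appearance changes.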

On a production line, a rule-based machine vision system can inspect hundreds, or even thousands, of parts per minute with high accuracy. It’s more cost-effective than human inspection. Its output is based on a programmatic, rule-based approach to solving inspection problems.

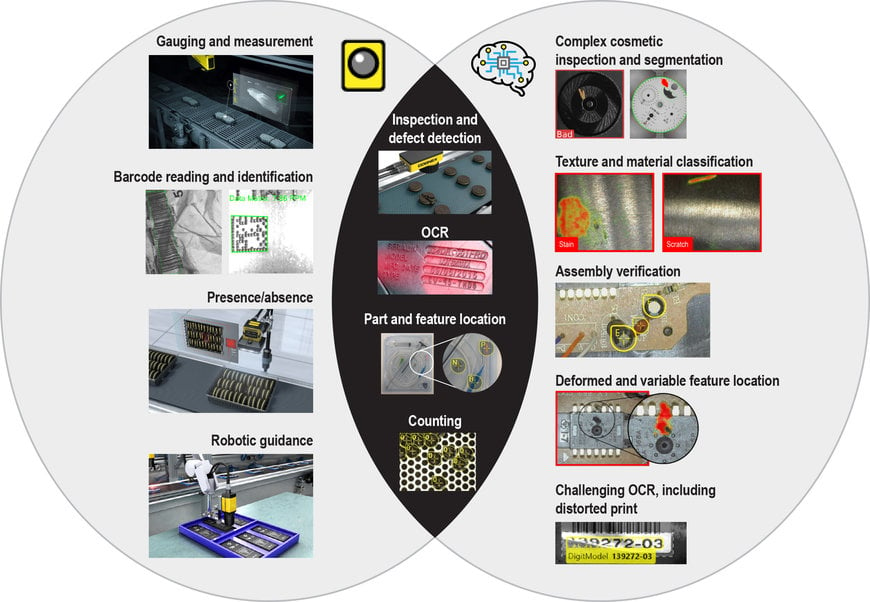

On a factory floor, traditional rule-based machine vision is ideal for: guidance (position, orientation…), identification (barcodes, data-matrix codes, marks, characters…), gauging (comparison of distances with specified values…), inspection (flaws and other problems such as a missing safety seal or a broken part…).
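Gauging is the easiest of these tasks to show in a few lines. The Python sketch below finds the two edges of a part along one scan line of pixels, converts the pixel distance to millimetres, and compares it with a nominal value. The intensity profile, the calibration factor `mm_per_pixel`, and the tolerance are assumed values for illustration.

```python
def find_edges(profile, threshold=128):
    """Indices where the intensity profile crosses the threshold."""
    return [
        i
        for i in range(1, len(profile))
        if (profile[i - 1] < threshold) != (profile[i] < threshold)
    ]

def gauge_width(profile, mm_per_pixel, nominal_mm, tolerance_mm, threshold=128):
    """Measure the distance between the first and last edge and check the tolerance.

    Returns (width in mm, True if within tolerance), or (None, False)
    when fewer than two edges are found.
    """
    edges = find_edges(profile, threshold)
    if len(edges) < 2:
        return None, False
    width_mm = (edges[-1] - edges[0]) * mm_per_pixel
    return width_mm, abs(width_mm - nominal_mm) <= tolerance_mm

# Hypothetical scan lines: a bright part on a dark background.
good_profile = [10, 10, 10, 200, 200, 200, 200, 200, 10, 10]
narrow_profile = [10, 10, 10, 200, 200, 200, 10, 10, 10, 10]

good_result = gauge_width(good_profile, mm_per_pixel=0.5, nominal_mm=2.5, tolerance_mm=0.1)
narrow_result = gauge_width(narrow_profile, mm_per_pixel=0.5, nominal_mm=2.5, tolerance_mm=0.1)
```

With these assumed values the first part measures 2.5 mm and passes, while the second measures 1.5 mm and fails.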

Rule-based machine vision is great with a known set of variables: Is a part present or absent? Exactly how far apart is this object from that one? Where does this robot need to pick up this part? These jobs are easy to deploy on the assembly line in a controlled environment. But what happens when things aren’t so clear cut?

This is where deep learning enters the game:

• Solve vision applications too difficult to program with rule-based algorithms.

• Handle confusing backgrounds and variations in part appearance.

• Maintain applications and re-train with new image data on the factory floor.

• Adapt to new examples without re-programming core networks.

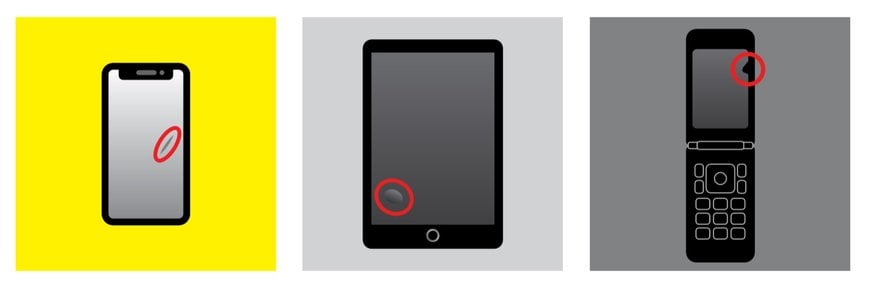

A typical industrial example: looking for scratches on electronic device screens. Those defects differ in size, scope, and location, and they appear on screens with different backgrounds. Given such variations, a deep learning system trained on representative examples can tell good parts from defective ones. Plus, training the network on a new target (like a different kind of screen) is as easy as taking a new set of reference pictures.

Inspecting visually similar parts with complex surface textures and variations in appearance is a serious challenge for traditional rule-based machine vision systems. “Functional” defects, which affect a part’s utility, are almost always rejected, but “cosmetic” anomalies may not be, depending upon the manufacturer’s needs and preferences. What is more, a traditional machine vision system has difficulty distinguishing between these two kinds of defects.

Due to multiple variables that can be hard to isolate (lighting, changes in color, curvature, or field of view), some defects are notoriously difficult to detect with a traditional, programmed machine vision system. Here again, deep learning brings more appropriate tools.

In short, traditional machine vision systems perform reliably with consistent and well-manufactured parts, but their applications become challenging to program as exceptions and defect libraries grow. For the complex situations that need human-like vision with the speed and reliability of a computer, deep learning will prove to be a truly game-changing option.

Deep learning’s benefits for industrial manufacturing

Rule-based machine vision and deep learning-based image analysis complement each other; adopting next-generation factory automation tools is not an either/or choice. In some applications, like measurement, rule-based machine vision will still be the preferred and cost-effective choice. For complex inspections involving wide deviation and unpredictable defects (too numerous and complicated to program and maintain within a traditional machine vision system), deep learning-based tools offer an excellent alternative.
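One way to picture this complementarity is an inspection routine that uses a rule for the measurement and a learned model for the judgment call. The Python sketch below is only an outline under stated assumptions: `defect_model` stands for any trained network that returns a defect probability, and `stub_model` is a hypothetical placeholder so the example runs on its own. The nominal size, tolerance, and threshold are likewise invented.

```python
from typing import Callable, Sequence

def inspect_part(
    measured_mm: float,
    image: Sequence[float],
    defect_model: Callable[[Sequence[float]], float],
    nominal_mm: float = 10.0,
    tolerance_mm: float = 0.2,
    defect_threshold: float = 0.5,
) -> str:
    """Combine a rule-based gauge with an example-based surface check."""
    # Step 1: measurement stays rule-based (explicit nominal value and tolerance).
    if abs(measured_mm - nominal_mm) > tolerance_mm:
        return "reject: out of tolerance"
    # Step 2: appearance is judged by a model trained on example images.
    if defect_model(image) >= defect_threshold:
        return "reject: surface defect"
    return "accept"

def stub_model(image: Sequence[float]) -> float:
    """Placeholder for a trained network: fraction of pixels brighter than 0.8."""
    return sum(1 for p in image if p > 0.8) / len(image)

clean_surface = [0.1, 0.2, 0.1, 0.2]
scratched_surface = [0.9, 0.9, 0.9, 0.1]

verdict_ok = inspect_part(10.1, clean_surface, stub_model)
verdict_size = inspect_part(10.5, clean_surface, stub_model)
verdict_surface = inspect_part(10.0, scratched_surface, stub_model)
```

Running the cheap, deterministic gauge first means the learned model is only consulted for parts that already meet the dimensional rule.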