How to Configure Quality of Service on Industrial Switches for Optimal Performance

Article by Henry Martel, Field Application Engineer, Antaira Technologies.

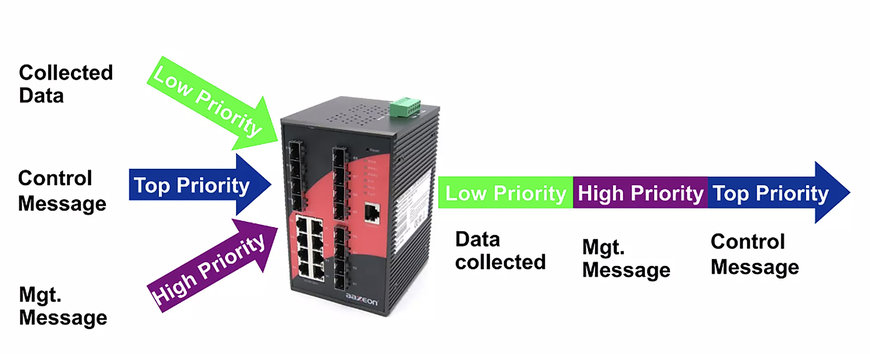

Quality of Service (QoS) prioritizes traffic so that important data receives consistent, predictable delivery.

Quality of Service, or QoS, is one of the most essential, although frequently misunderstood, features of an industrial Ethernet switch. Essentially, QoS is a set of technologies that allocates network resources to an organization’s most important applications, data flows, or users. Sending prioritized packets ahead of less important traffic helps ensure the availability and consistency of network resources for mission-critical applications, and preserves data integrity during periodic network congestion. Less important traffic is held in lower-priority queues, which the network administrator configures through the switch’s QoS priority queuing mechanism, and is sent when bandwidth becomes available.

Link congestion from oversubscribed switch ports was once mainly a problem for enterprise IT networks. Now, as industrial networks deploy more smart devices that rely heavily on the network infrastructure for bandwidth and availability, network administrators on the plant floor face similar congestion management issues. As a result, QoS has made its way from IT to OT.

In this article, we discuss how to correctly configure QoS on managed industrial switches. By following the steps outlined, you will be able to configure QoS in a way that provides more reliable, higher-performance connectivity on business networks.

Why Quality of Service is Important

All computer networks, large and small, experience periodic congestion. Congestion occurs when a router or Ethernet switch receives more traffic than it can send out of a port, resulting in possible latency and packet loss. A familiar example is a link that is momentarily saturated by a network backup, or busy times of the workday when many employees run the same application simultaneously.
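To put numbers on this, the short Python sketch below estimates how long an egress port's buffer lasts once the traffic arriving for that port exceeds its line rate. The function name, the traffic rates, and the 1.5 MB buffer size are assumptions for illustration only, not figures for any particular switch; real switches often share buffer memory between ports in vendor-specific ways.

```python
def time_to_fill_buffer(ingress_bps, egress_bps, buffer_bytes):
    """Seconds until an egress buffer overflows when ingress exceeds egress.

    Hypothetical helper. Returns None when the port is not oversubscribed,
    because the buffer never fills in that case.
    """
    excess_bps = ingress_bps - egress_bps
    if excess_bps <= 0:
        return None
    return buffer_bytes * 8 / excess_bps  # bytes -> bits, divided by excess rate


GBPS = 1_000_000_000

# Assumed scenario: two 1 Gbps ports sending at line rate toward one 1 Gbps
# uplink, with 1.5 MB of buffer available on that uplink.
seconds = time_to_fill_buffer(2 * GBPS, 1 * GBPS, 1_500_000)
print(f"Buffer full after {seconds * 1000:.1f} ms")  # prints: Buffer full after 12.0 ms
```

Once the buffer is full, every additional packet is dropped, which is why a burst lasting only a few milliseconds can already cause loss.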

In a large-scale distributed industrial plant, network congestion is more than a nuisance: it is a threat to productivity and profits. Multiple networked systems and automated devices must work together in precisely timed sequences. Throughout the plant floor, connected devices and IIoT sensors rely on the network to communicate real-time information on operational status and maintenance requirements. If the network is starved for bandwidth, all of its traffic experiences latency, delaying timing controls and status updates. For time-critical processes, even a few milliseconds of latency can lead to extremely expensive problems, ranging from downtime to equipment malfunctions. QoS gives critical data streams precedence within an industrial network, preventing the unwelcome consequences of latency and dropped packets.

QoS is equally useful for “inelastic” business applications such as VoIP, video surveillance, online training, and videoconferencing, which have minimum bandwidth requirements, maximum latency limits, and high sensitivity to jitter. QoS assigns priority to the appropriate packets and allocates bandwidth strategically to ensure the best user experience. Employees depend on these services to get their jobs done, and when QoS is poor, so is the quality of their work.

QoS Models

The three implementation models for Quality of Service are Best Effort, Integrated Services (IntServ), and Differentiated Services (DiffServ). While Best Effort is the simplest approach, it can lead to slower, less reliable connections on busy networks. The more advanced DiffServ and IntServ models are essential in industries where reliable communication of network data across enterprise networks is crucial, as is the case with factory automation, Intelligent Traffic Systems, or Smart Cities.

Best Effort: On a network where QoS is not enabled, all traffic is delivered on a “Best Effort” basis, meaning every transmitted packet has an equal probability of being delivered or dropped. This simple first-in, first-out (FIFO) approach, which spreads packet loss evenly across all traffic, is suitable for non-critical data flows.
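A minimal Python sketch of best-effort handling: one FIFO queue with tail drop. Packets are accepted in arrival order until the queue is full, and everything after that is dropped, whatever it carries. The queue depth and packet labels are hypothetical, and for simplicity the sketch models a single burst arriving faster than the port can drain it.

```python
from collections import deque


def best_effort_fifo(arrivals, capacity):
    """Simulate one FIFO queue with tail drop for a single burst.

    arrivals: packet labels in arrival order.
    capacity: maximum number of packets the queue can hold (assumed value).
    Returns (queued, dropped). No packet is treated as more important than
    another; only arrival order decides what survives.
    """
    queue = deque()
    dropped = []
    for packet in arrivals:
        if len(queue) < capacity:
            queue.append(packet)
        else:
            dropped.append(packet)  # tail drop: queue full, discard newcomer
    return list(queue), dropped


burst = ["video", "backup", "backup", "backup", "control", "video"]
queued, dropped = best_effort_fifo(burst, capacity=4)
print("sent in order:", queued)  # ['video', 'backup', 'backup', 'backup']
print("dropped:", dropped)       # ['control', 'video']
```

The control packet is lost simply because it arrived after the backup traffic, which is exactly the behavior QoS is meant to prevent for critical flows.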

IntServ: IntServ uses the Resource Reservation Protocol (RSVP) to reserve bandwidth along the network path, providing end-to-end QoS and bandwidth management for real-time applications with bandwidth, delay, and packet-loss requirements, so that they achieve predictable, guaranteed service levels. Call Admission Control (CAC) admits a new flow only when enough unreserved bandwidth remains, which prevents other traffic from using the reserved bandwidth. The downside is that any reserved bandwidth an application does not use is a wasted resource.
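RSVP itself is a signaling protocol exchanged between network devices; the Python sketch below models only the admission-control decision and the wasted-reservation downside described above. The class name, link size, and flow names are hypothetical.

```python
class AdmissionController:
    """Toy model of IntServ-style call admission control (CAC) on one link.

    Hypothetical helper: each flow reserves a fixed bandwidth, and a request
    is admitted only if it fits in the capacity not already reserved.
    """

    def __init__(self, link_mbps):
        self.link_mbps = link_mbps
        self.reservations = {}  # flow name -> reserved Mbps

    def request(self, flow, mbps):
        """Admit or reject a reservation; a repeat request replaces the old one."""
        others = sum(v for name, v in self.reservations.items() if name != flow)
        if others + mbps > self.link_mbps:
            return False  # rejected: the link would be oversubscribed
        self.reservations[flow] = mbps
        return True

    def idle_reserved(self, usage_mbps):
        """Reserved bandwidth that flows are not using and no one else may use."""
        return sum(max(0, mbps - usage_mbps.get(flow, 0))
                   for flow, mbps in self.reservations.items())


cac = AdmissionController(link_mbps=100)
print(cac.request("camera-1", 40))  # True
print(cac.request("camera-2", 40))  # True
print(cac.request("camera-3", 40))  # False: only 20 Mbps left unreserved
print(cac.idle_reserved({"camera-1": 15, "camera-2": 40}))  # 25
```

Here camera-3 is refused even though camera-1 is using only 15 of its 40 reserved Mbps; those idle 25 Mbps are the wasted resource.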

DiffServ: DiffServ QoS resolves the shortcomings of the IntServ and Best Effort models. It classifies IP traffic into specific traffic classes and marks each packet according to its QoS requirements. Every traffic class is assigned a different level of service. Compared to IntServ, DiffServ can handle a larger number of traffic classes with relatively simple configuration that requires less administrative control. DiffServ has largely replaced IntServ in modern industrial networks.
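As a rough illustration of DiffServ classification and marking at the network edge, the Python sketch below matches packets on protocol and destination port and writes a DSCP value. The ports are the well-known ones for EtherNet/IP (UDP 2222 for implicit I/O, TCP 44818 for explicit messaging), Modbus TCP (502), and SIP (5060). The class names, the DSCP choices, and the policy of re-marking unmatched traffic to default are assumptions for the sketch, not a vendor or ODVA recommendation.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    protocol: str   # "tcp" or "udp"
    dst_port: int
    dscp: int = 0   # 0 = Default / Best Effort


# Hypothetical classification policy: (protocol, port) -> (class, DSCP).
POLICY = {
    ("udp", 2222): ("control-io", 46),    # EtherNet/IP implicit I/O -> EF
    ("tcp", 44818): ("control-msg", 26),  # EtherNet/IP explicit msg -> AF31
    ("tcp", 502): ("control-msg", 26),    # Modbus TCP -> AF31
    ("udp", 5060): ("signaling", 24),     # SIP -> CS3
}


def classify_and_mark(pkt: Packet) -> str:
    """Classify a packet at the edge and overwrite its DSCP marking.

    Unmatched traffic is re-marked to default (assumed trust policy), so
    untrusted end devices cannot promote their own traffic.
    """
    traffic_class, dscp = POLICY.get((pkt.protocol, pkt.dst_port), ("default", 0))
    pkt.dscp = dscp
    return traffic_class


pkt = Packet("tcp", 502)
print(classify_and_mark(pkt), pkt.dscp)  # control-msg 26
```

Switches further along the path then act on the DSCP value alone, without repeating this per-port lookup.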

The DiffServ architecture specifies that each packet is classified and marked as it enters the network, and that every switch or router along the path then handles it according to that marking until it exits. Depending on the type of traffic being transmitted, the marking is carried in the Layer 2 frame header or the Layer 3 packet header. At Layer 2, the marking is the Class of Service (CoS) value defined by IEEE 802.1p, a 3-bit priority carried in the 802.1Q VLAN tag that sorts traffic into eight priority levels (0 through 7). At Layer 3, packets carry a Differentiated Services Code Point (DSCP) that places traffic in categories such as Assured Forwarding, Expedited Forwarding, and Best Effort. DSCP is similar to CoS, but the Assured Forwarding classes also carry a drop probability of low, medium, or high. CoS and DSCP markings tell industrial switches which class the traffic is assigned to and what actions to take.
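Both markings are small bit fields. The Python sketch below shows where each one lives and how the standard codepoints decode: the 802.1p priority (PCP) is the top 3 bits of the 16-bit 802.1Q Tag Control Information, DSCP is the top 6 bits of the IPv4 ToS (IPv6 Traffic Class) byte, and Assured Forwarding values follow DSCP = 8 × class + 2 × drop precedence. The function names are hypothetical helpers for illustration.

```python
def pcp_from_vlan_tci(tci: int) -> int:
    """802.1p priority: top 3 bits of the 802.1Q Tag Control Information."""
    return (tci >> 13) & 0b111


def dscp_from_tos(tos: int) -> int:
    """DSCP: top 6 bits of the ToS / Traffic Class byte (low 2 bits are ECN)."""
    return (tos >> 2) & 0b111111


def describe_dscp(dscp: int) -> str:
    """Name a DSCP value using the standard EF, AF, CS, and default codepoints."""
    if dscp == 46:
        return "EF (Expedited Forwarding)"
    if dscp == 0:
        return "Default (Best Effort)"
    cls, rest = divmod(dscp, 8)
    if 1 <= cls <= 4 and rest in (2, 4, 6):
        drop = rest // 2  # AF drop precedence: 1 = low, 2 = medium, 3 = high
        level = {1: "low", 2: "medium", 3: "high"}[drop]
        return f"AF{cls}{drop} (class {cls}, {level} drop precedence)"
    if rest == 0:
        return f"CS{cls} (Class Selector)"
    return "other (not EF, AF, CS, or default)"


print(pcp_from_vlan_tci(0xA00A))           # 5  (priority 5 on VLAN 10)
print(describe_dscp(dscp_from_tos(0xB8)))  # EF (Expedited Forwarding)
print(describe_dscp(26))                   # AF31 (class 3, low drop precedence)
```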

Configuration of QoS

Configuring QoS mechanisms on industrial switches can be a complex process involving many concepts beyond the scope of this article. Nevertheless, the following condensed steps can help ensure that your switch QoS is set up correctly.

Step 1: Identify the Critical Applications

The first step in configuring end-to-end QoS on industrial switches is to assess your network and identify the applications that require high priority. These typically include latency-sensitive or bandwidth-heavy applications, such as industrial control systems, video streaming, and VoIP, whose performance may deteriorate during periods of congestion. As a best practice, involve leaders from every business department, not just network administrators, in deciding which applications are top priorities.

Step 2: Configure Traffic Classes

After identifying your high-priority applications, the next step is to configure traffic classes on the network switch. A traffic class is a group of packets that share similar characteristics and require similar treatment. For example, one traffic class might be created for audio and video content, another for VoIP voice traffic, and another for all other types of traffic. In general, three basic QoS class models can be deployed, depending on how finely you need to distinguish the applications running on your network:

- 4-Class QoS Model (Voice, signaling, mission-critical data, default)

- 8-Class QoS Model (4-class model plus multimedia conferencing, multimedia video streaming, network control, and scavenger)

- 12-Class QoS Model (8-class model plus real-time interactive video, broadcast video, management/OAM, and bulk data)

Avoid configuring too many Quality-of-Service classes. It is not necessary to create QoS policies for every kind of data flow on a network. The fewer classes there are, the easier the policy will be to deploy and maintain.
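A 4-class model can be written down as a small lookup table. In the Python sketch below, the DSCP values (EF for voice, CS3 for signaling, AF31 to AF33 for mission-critical data) and the queue numbers are illustrative assumptions, not values taken from this article; match them to your own marking plan and to the number of egress queues your switch provides.

```python
# Hypothetical 4-class policy: class name -> DSCP values it matches and the
# egress queue it uses (higher queue number = higher priority, assumed).
FOUR_CLASS_MODEL = {
    "voice":                 {"dscp": {46},         "queue": 3},
    "signaling":             {"dscp": {24},         "queue": 2},
    "mission-critical data": {"dscp": {26, 28, 30}, "queue": 1},
    "default":               {"dscp": set(),        "queue": 0},  # catch-all
}


def class_for_dscp(dscp: int, model=FOUR_CLASS_MODEL) -> str:
    """Return the traffic class for a DSCP value; unmatched values fall to default."""
    for name, spec in model.items():
        if dscp in spec["dscp"]:
            return name
    return "default"


for value in (46, 24, 28, 34, 0):
    cls = class_for_dscp(value)
    print(value, "->", cls, "queue", FOUR_CLASS_MODEL[cls]["queue"])
```

With only four classes, any codepoint not deliberately promoted (such as 34 above) shares the default queue, which keeps the policy short and easy to audit.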

Step 3: Assign Priorities

Once the traffic classes have been created, priorities need to be assigned to them. The assigned priority tells the Ethernet switch how to handle each traffic class during congestion, based on the CoS or DSCP value in the packet header. A priority setting can also provide a minimum bandwidth guarantee or a maximum bandwidth limit. Internally, queuing mechanisms buffer less urgent packets until the outgoing link has capacity to send them.
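To show how priorities turn into forwarding order, here is a Python sketch of strict-priority scheduling on one egress port. The mapping of the eight 802.1p priorities onto four queues is a hypothetical example; real switches let you configure this table, and their defaults vary by vendor and by the number of queues.

```python
from collections import deque

# Hypothetical mapping of 802.1p priorities (0-7) onto four egress queues.
PCP_TO_QUEUE = {0: 0, 1: 0, 2: 1, 3: 1, 4: 2, 5: 2, 6: 3, 7: 3}


class StrictPriorityPort:
    """Egress port that always serves the highest-priority non-empty queue."""

    def __init__(self, num_queues=4):
        self.queues = [deque() for _ in range(num_queues)]

    def enqueue(self, packet, pcp):
        # Less urgent packets wait in their queue until higher queues are empty.
        self.queues[PCP_TO_QUEUE[pcp]].append(packet)

    def dequeue(self):
        for queue in reversed(self.queues):  # queue 3 first, queue 0 last
            if queue:
                return queue.popleft()
        return None  # nothing waiting


port = StrictPriorityPort()
port.enqueue("backup-1", pcp=0)
port.enqueue("camera-1", pcp=4)
port.enqueue("backup-2", pcp=0)
port.enqueue("control-1", pcp=6)
print([port.dequeue() for _ in range(4)])
# ['control-1', 'camera-1', 'backup-1', 'backup-2']
```

Pure strict priority can starve the lowest queues if high-priority traffic never lets up, which is one reason to pair priorities with the bandwidth limits described in the next step; many switches also offer weighted round-robin scheduling for the same reason.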

Step 4: Set Bandwidth Limits

In addition to assigning priorities to data traffic, it is equally important to set bandwidth limits for each traffic class. Each class is assigned a base (guaranteed) bandwidth and a maximum bandwidth. This ensures that high-priority traffic does not consume all available bandwidth on the network, leaving little or none for other types of traffic. The DiffServ architecture also offers the flexibility to share the unused guaranteed bandwidth of high-priority applications with lower-priority applications, further preventing delays and drops.
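The interplay of guaranteed and maximum bandwidth can be modeled in a few lines of Python. This is a simplified sketch, not how any particular switch computes it: the class figures are invented, the guarantees are assumed to sum to no more than the link rate, and handing out spare capacity in priority order is one simple policy chosen for illustration (switches typically implement this with weighted queues and rate limiters).

```python
def allocate(link_mbps, classes):
    """Split link capacity among traffic classes (hypothetical model).

    classes: dicts with name, demand, guaranteed, maximum (all in Mbps),
    ordered from highest to lowest priority.
    Pass 1 gives each class up to its guarantee. Pass 2 lends the remaining
    capacity, including guarantees other classes left unused, to classes
    that still have demand, in priority order, capped at each class maximum.
    """
    alloc = {c["name"]: min(c["demand"], c["guaranteed"]) for c in classes}
    spare = link_mbps - sum(alloc.values())
    for c in classes:
        if spare <= 0:
            break
        want = min(c["demand"], c["maximum"]) - alloc[c["name"]]
        extra = min(max(want, 0), spare)
        alloc[c["name"]] += extra
        spare -= extra
    return alloc


example_classes = [
    {"name": "control", "demand": 10, "guaranteed": 30, "maximum": 40},
    {"name": "video",   "demand": 60, "guaranteed": 40, "maximum": 50},
    {"name": "bulk",    "demand": 80, "guaranteed": 10, "maximum": 100},
]
print(allocate(100, example_classes))
# {'control': 10, 'video': 50, 'bulk': 40}
```

Video is held to its 50 Mbps maximum even though it wants 60, and bulk traffic borrows the 20 Mbps of guaranteed bandwidth that control traffic is not using.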

Step 5: Test and Fine-Tune

QoS is not a set-it-and-forget-it project. It is cyclical and ongoing, and it requires regular oversight and auditing. After QoS has been configured on the Ethernet switch, test and fine-tune the settings to ensure optimal network performance. This involves running network tests to measure performance, determining whether critical applications are receiving the necessary priority, and adjusting settings as necessary.
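As one way to turn test results into a pass/fail check, the Python sketch below summarizes one-way delay samples for a flow and compares them against targets. The sample values and thresholds are hypothetical, and the jitter figure is a simple mean of differences between consecutive samples rather than the smoothed estimator that RFC 3550 defines for RTP.

```python
import statistics


def summarize_delays(delays_ms):
    """Latency and jitter summary from one-way delay samples in milliseconds.

    Jitter is approximated as the mean absolute difference between
    consecutive samples (a simplification, not the RFC 3550 estimator).
    """
    diffs = [abs(b - a) for a, b in zip(delays_ms, delays_ms[1:])]
    return {
        "mean_ms": statistics.mean(delays_ms),
        "max_ms": max(delays_ms),
        "jitter_ms": statistics.mean(diffs) if diffs else 0.0,
    }


def meets_targets(summary, max_latency_ms, max_jitter_ms):
    """True if worst-case latency and jitter are within the assumed targets."""
    return (summary["max_ms"] <= max_latency_ms
            and summary["jitter_ms"] <= max_jitter_ms)


# Hypothetical samples for a control flow, captured during a congestion test.
samples = [1.2, 1.4, 1.1, 3.9, 1.3]
summary = summarize_delays(samples)
print(summary)
print(meets_targets(summary, max_latency_ms=2.0, max_jitter_ms=0.5))  # False
```

A failing check like this one (a 3.9 ms spike) suggests revisiting the class assignments, queue mapping, or bandwidth limits, then re-running the same test to confirm the change helped.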

In summary, configuring QoS on managed industrial switches requires identifying critical applications, configuring traffic types and classes, assigning priorities, setting bandwidth limits, and testing and fine-tuning the settings to ensure optimal network performance. By following these steps, you can configure and implement QoS on industrial switches in a way that yields an efficient, well-refined, documented network customized to meet your specific needs.

www.antaira.com